HDR Imaging and Tonemapping

The Problem§

The real world is in high dynamic range. A camera sensor is unable to capture in HDR; as bright spots may oversaturate a photosensor and force it to white – losing information in that area. We can modify our exposure time to adjust the amount of light that enters the sensor – but there is no one exposure time that will give us a good picture, so we must take many.

Many-to-one§

Camera Pipeline:

- 3D Radiance ($L = \frac{W}{srm^2}$)

- Lens

- 2D Sensor (Irradiance, $E = \frac{W}{m^2}$)

- Shutter (Exposure time, $\Delta t$)

- Sensor Exposure $H = E\Delta t$

- ADC (Analogue to Digital Converter)

- Raw Image

- Remapping

- Pixel Values ($Z$)

Our goal is to go from $Z$ to $E$, however, to fully go to $E$ we must reverse the ADC – we have no interest or intention of doing so. We can note that $E$ is invariant to exposure time, so if we know $\Delta t$ we can find $E$ – typically $\Delta t$ is given as metadata on an image.

We need multiple images to recover the $Z$ vs $H$ curve – typically with varying $\Delta t$.

Camera Response Curve§

Then, our goal is to find $E_i$ or the exposure sensitivity for the $i$th pixel.

Objective: Solve for $E_i$ in $Z_{i,j} = f(E_i\Delta t_j)$, where $Z_{i,j}$ represents the value of pixel $i$ on image $j$, and $\Delta t_j$ is the exposure time on the $j$th image.

Using algebra, we get $$ \begin{align*} f^{-1}(Z_{i,j}) &= E_i\Delta t_j\\ \log f^{-1}(Z_{i, j}) &= \log E_i + \log \Delta t_j\\ g(Z) &= \log \circ f^{-1} (z)\\ g(Z_{i, j}) &= \log E_i + \log \Delta t_j. \end{align*} $$

In the above expression, the values $Z_{i,j}$ and $\Delta t_j$ are known, and $g(Z)$ and $E_i$ are unknown.

Through optimization, we may solve for the unknown values by via the following $$ [g, E_i] = \argmin_{g,\ E_i} \sum_{i=1}^N\sum_{j=1}^P \left[g(z_{i,j}) - \log E_i - \log \Delta t_j\right]^{2}, $$ where $N$ is the number of pixels, $P$ the number of images.

Number of Samples

For an 8-bit color depth image, $Z_{i, j} \in [0, 255] \to 256$ values, then there are 256 unknowns for the function $g(Z)$, i.e. $g(0), g(1), \dots, g(255).$ We also have $N$ unknowns for $E_i$.

- There are $N + 256$ unknowns

- There are $NP$ total equations

Then, $NP > N + 256 \implies NP - N > 256 \implies N(P - 1) > 256 \implies N > \frac{256}{P-1}$. Usually $N = 5\left(\frac{256}{P-1}\right)$ is sufficient, e.g. if $P = 11$ then $N = 128$ random pixel samples is sufficient to recover $E$.

This makes the assumption that the images are reliable – however, this is not the case, we modify our equation to

$$ [g, E_i] = \argmin_{g,\ E_i} \sum_{i=1}^N\sum_{j=1}^P \Big[w(z_{i,j})\left(g(z_{i,j}) - \log E_i - \log \Delta t_j\right)\Big]^{2} + \lambda \sum_{z=0}^{2^b} \left[w(z)\frac{d^2}{dz^2}g(z)\right]^2, $$

- $w(z)$ is a function that assigns a weight to a pixel value; we want large $z$ values to map to smaller values, as these are generally unreliable. One possible function is the triangle function given by $$ w(z) = \begin{cases} z, &\text{ if } z \leq 2^b - 1\\ 2^b - (z + 1), &\text{otherwise} \end{cases} $$ where $b$ is the bit-depth of the image.

- There is no reason for a manufacturer to use a noisy curve; therefore, by making the assumption that the camera’s response curve is smooth, $$ \frac{d^2}{dz^2}g(z) \approxeq g(z - 1) - 2g(z) + g(z + 1) = 0. $$

- $\lambda$ is a factor used to balance the two terms – a larger $\lambda$ gives a smoother response curve.

We only need to perform this optimization once per camera, then we can reuse it for other images; sometimes this method is called “irradiance calibration”.

Tonemapping§

Previously we solved for $g: Z \to E, $ $g(Z_{i,j}) = \log E_i + \log \Delta t_j,$ or for every pixel we get $P$ estimates for $\log E_i,$ we cannot just use the arithmetic mean as the highly irradiant areas in longer exposures will bias the mean.

We can then reuse the weight function $w(Z)$ to get a weighted mean, $$ \log E_i \approxeq \frac{\sum_{j=1}^P w(Z_{i, j})\left[g(Z_{i,j}) - \log \Delta t_j\right]}{\sum_{j=1}^P w(Z_{i, j})}. $$

Having recovered the irradiance of each pixel in an image, we can now use it in various applications such as reusing the irradiance in a computer rendering or in viewing an image – however, we must compress the high dynamic range into the range displayable by a device.

- Clipping

- $I = \min (E, I)$

- Causes the loss of detail in highly irradiant areas.

- Scaling

- $I = \frac{E}{\max E}$

- Causes the loss of detail in areas of low irradiance.

- Reinhard Global Operator

- $I = \frac{E}{1 + E}$

- Better than scaling and clipping as it increasly sharply at small values (boosting), and compresses the high irradiance areas.

- Gamma Compression

- $I’’ = (I’)^\gamma, I’ = \frac{E}{\max E}$

- We first perform scaling, then a gamma correction to boost the smaller values like Reinhard.

- Colour gets washed out.

- Gamma Compression on Intensity

- Intensity is given by $L = \frac{1}{3}(R + G + B)$

- Then, gamma compression on intensity $L’’ = (L’)^\gamma, L’ = \frac{L}{\max L}$

- Colour is given by $C = \frac{1}{L}\left\{R, G, B\right\}$

- Then, the final image is computed via $I’’ = L’’ C$

- This preserves colour, but instead washes out intensity – or lowers the constrast of the image.

Chiu et. al (1993)§

In Chiu1, the authors propose the following method:

$$ I’ = I - (I \star f(x)) = I - J, $$

where $f(x)$ is the Gaussian function,

$$ f(x) = \frac{1}{\sqrt{2\pi}\sigma^2}e^{-\frac{x^2}{2\sigma^2}}. $$

Their process can be visualised by the following image,

Their motivation was to use a lowpass filter (here, we used the Gaussian as an illustration) to isolate ranges of radiance that are perceptually important.

Unfortunately, this approach leads to a “halo” effect around sharp irradiance transitions due to undesirable easing of a transition in a step-like function.

To understand why, we turn towards the definition of the convolution,

$$ J(p) = \sum_{q} f(p - q) I(q). $$

We may notice that $f(p - q)$ is a weighting on $I(q)$ that is dependent on the spatial distance between the two pixels $p$ and $q.$

Visually, we can see the effect that this spatial weighting has on the step-like result that we desire

Tomasi and Manduchi (1998)§

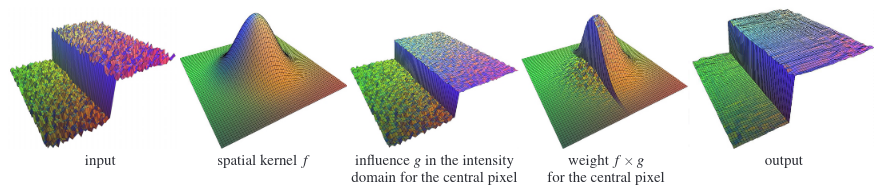

In Tomasi2, they explore a method called “bilateral filtering” to reduce the halo effect.

The method is given by the following adjustment the convolution calculation

$$ J(p) = \frac{1}{k(p)} \sum_q f_s(p - q) f_c (I(p) - I(q)) I(q), \tag{1a} $$

where

$$ k(p) = \sum_q f_s(p - q) f_c(I(p) - I(q)). \tag{1b} $$

The $f_s$ and $f_c$ functions represent the spatial and colour filters – now instead of weighting just the spatial distance of two pixels, we also use the “colour distance”.

Durand and Dorsey (2002); Paris and Durand (2006)§

In Durand3, they explore a method of computing an approximation of the bilateral filter that is $\mathcal{O}(1)$ per pixel.

A further improvement upon Durand3 was made in Paris4. The authors note that the product of the spatial and range Gaussian defines a higher dimensional Gaussian in the 3D product space between the domain and range of the image.

Visualization of the weights in the 3D product space in Durand3

However, the definition of the bilateral filter in eq. 1 is not a convolution as it is a summation over the 2D spatial domain. The authors introduce a new dimension $\zeta$ for the intensity for each point in the product space in order to define a summation of the 3D space.

We now define for each point $(x, y, \zeta)$ an intensity $I$, and we let $\mathcal{R}$ be the interval for which intensites are defined. Also, we define the Kronecker symbol $\delta(\zeta)$ by $\delta(0) = 1, \delta(\zeta) = 0.$

$$ J(p) = \frac{1}{k(p)} \sum_{q \in \mathcal{S}}\sum_{\zeta \in \mathcal{R}} f_s(||p - q||) f_c(|I(p) - \zeta|) \delta(\zeta - I(q)) I(q), $$

where

$$ k(p) = \sum_{q \in \mathcal{S}}\sum_{\zeta \in \mathcal{R}} f_s(||p - q||) f_c(|I(p) - \zeta|) \delta(\zeta - I(q)). $$

We can use homogeneous coordinates to express the same equation,

$$ \begin{pmatrix} W(p)J(p)\\ W(p) \end{pmatrix} = \sum_{q \in \mathcal{S}}\sum_{\zeta \in \mathcal{R}} f_s(||p - q||) f_c(I(p) - \zeta) \delta(\zeta - I(q)) \begin{pmatrix} W(q)I(q)\\ W(q) \end{pmatrix}, $$

where $W(q) = 1.$

Note that the $\delta(\zeta - I(q))$ terms are cancelled when $\zeta \neq I(q)$.

The summation is now over the space $\mathcal{S} \times \mathcal{R},$ the product $f_s f_c$ defines a separable Gaussian kernel $f_{s, c}$ on $\mathcal{S} \times \mathcal{R},$

$$ f_{s, c}: (x \in \mathcal{S}, \zeta \in \mathcal{R}) \to f_s(||x||) f_c(|\zeta|). $$

Then, we construct the functions $i$ and $w$,

$$ i: (x \in \mathcal{S}, \zeta \in \mathcal{R}) \to I(x), $$

$$ w: (x \in \mathcal{S}, \zeta \in \mathcal{R}) \to \delta(\zeta - I(x)). $$

Then, we rewrite the 3D summation

$$ \delta(\zeta - I(q)) \begin{pmatrix} W(q)I(q)\\ W(q) \end{pmatrix}= \begin{pmatrix} \delta(\zeta - I(q))W(q)I(q)\\ \delta(\zeta - I(q))W(q) \end{pmatrix}= \begin{pmatrix} w(q, \zeta)i(q, \zeta)\\ w(q, \zeta) \end{pmatrix}. $$

Using separability of the 3D Gaussian,

$$ \begin{pmatrix} W(p)J(p)\\ W(p) \end{pmatrix}= \sum_{(q, \zeta) \in \mathcal{S}\times\mathcal{R}} f_{s, c}(p - q, I(p) - \zeta) \begin{pmatrix} w(q, \zeta)i(q, \zeta)\\ w(q, \zeta) \end{pmatrix}. $$

This formula corresponds to the value of the convolution between $f_{s, c}$ and the two-dimensional function $(wi, w)$ at $(p, I(p))$,

$$ \begin{pmatrix} W(p)J(p)\\ W(p) \end{pmatrix}= \left[ g_{s, c} \otimes \begin{pmatrix} wi\\ w \end{pmatrix} \right] (p, I(p)). $$

The functions $i^b$ and $w^b$ are given by

$$ (w^b i^b, w^b) = g_{s, c} \otimes (wi, w). $$

Finally, the bilateral filter can be given by

$$ J(p) = \frac{w^b(p, I(p))i^b(p, I(p))}{w^b(p, I(p))}. $$

First, we perform slicing or evaluating $w^bi^b$ and $w^b$ at $(p, I(p)),$ then division in order to normalize the result.

K. Chiu, M. Herf, P Shirley, S. Swamy, C. Wang, K. Zimmerman. “Spatially Nonuniform Scaling Functions for High Contrast Images” (1993). ↩︎

C. Tomasi, R. Manduchi. “Bilateral Filtering for Gray and Color Images” (1998). ↩︎

F. Durand, J. Dorsey. “Fast Bilateral Filtering for the Display of High-Dynamic-Range Images” (2002). ↩︎ ↩︎ ↩︎

S. Paris, F. Durand. “A Fast Approximation of the Bilateral Filter using a Signal Processing Approach” (2006). ↩︎